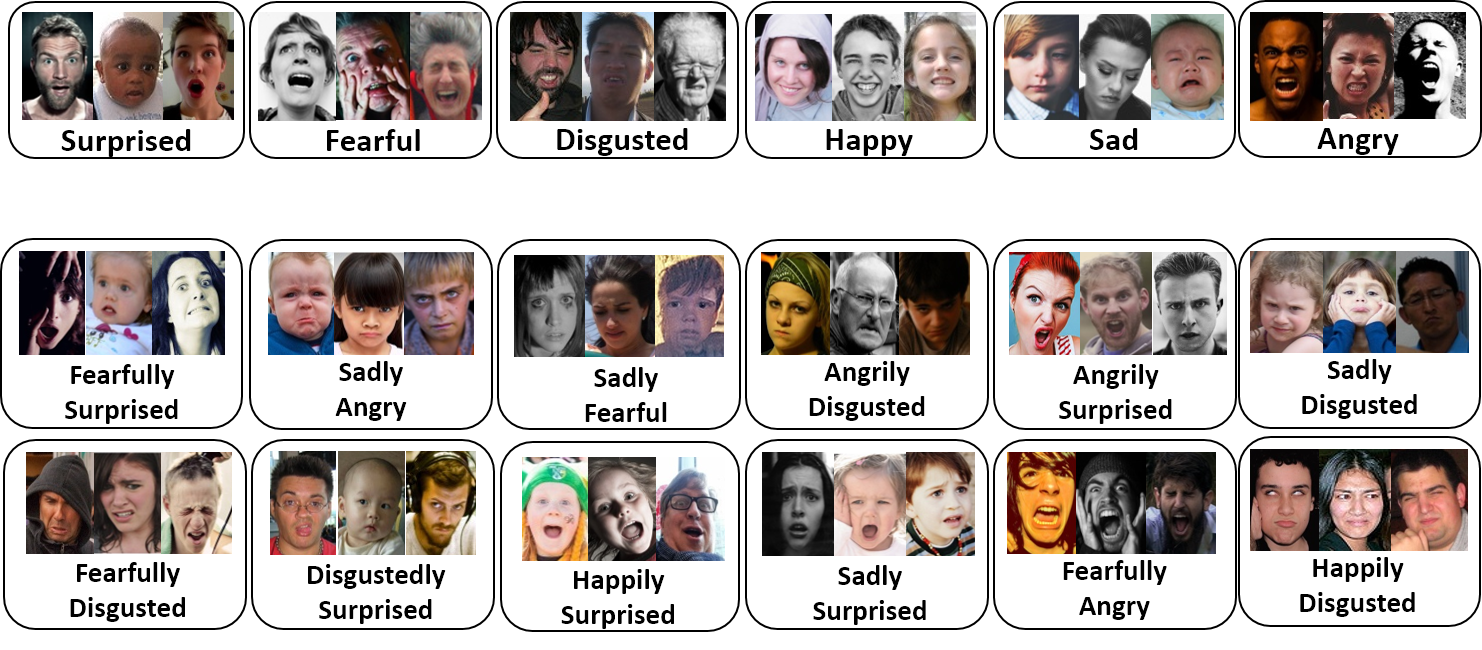

Real-world Affective Faces Database (RAF-DB) is a large-scale facial expression database with around 30K highly diverse facial images downloaded from the Internet. Using crowdsourced annotation, each image has been independently labeled by about 40 annotators. Images in this database vary greatly in subjects' age, gender and ethnicity, head pose, lighting conditions, occlusions (e.g. glasses, facial hair or self-occlusion), post-processing operations, etc.

RAF-DB has large diversities, large quantities, and rich annotations, including:

large number of real-world images;

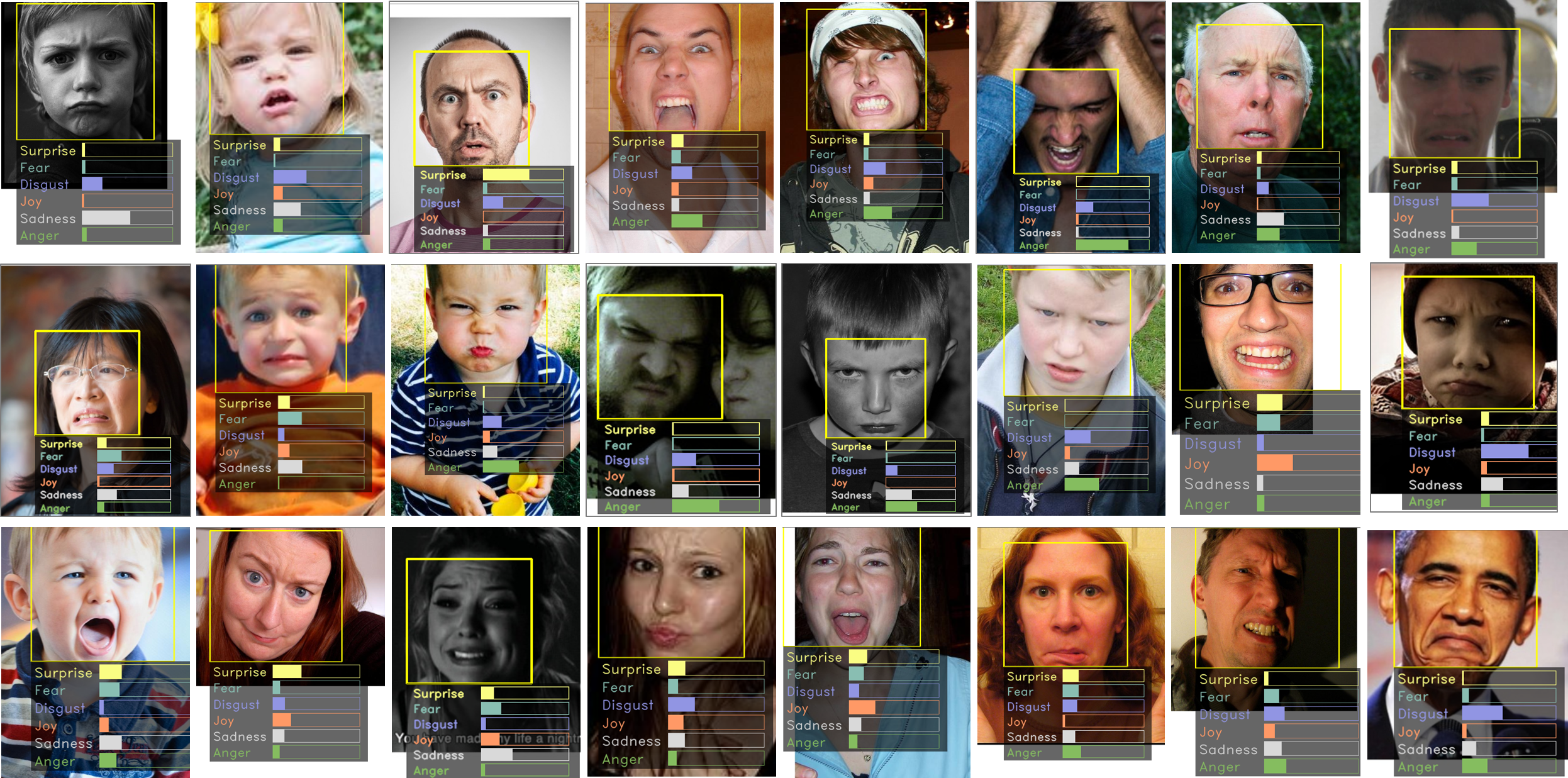

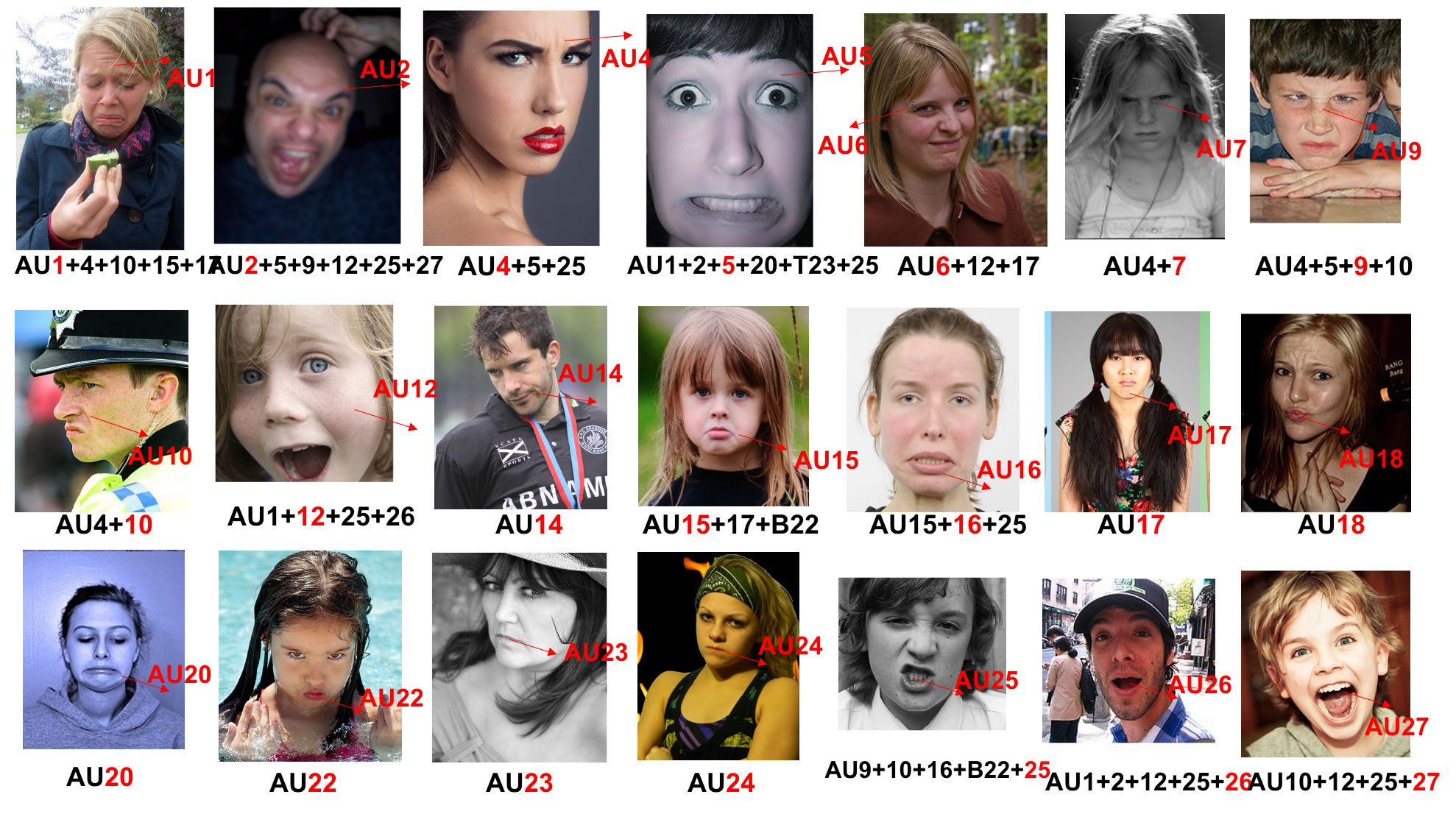

a 7-dimensional expression distribution vector for each image; two different subsets: single-label subset and two-tab subset; 5 accurate landmark locations, 37 automatic landmark locations, bounding box, race, age range and gender attributes annotations per image; baseline classifier outputs for basic emotions and compound emotions.
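A per-image expression distribution vector can be illustrated with a minimal sketch. It assumes the vector is obtained by normalizing the roughly 40 annotators' votes over seven basic emotions. The label names and their order in `BASIC_EMOTIONS`, and the helpers `expression_distribution` and `dominant_label`, are hypothetical. RAF-DB's actual aggregation procedure may differ from plain vote counting.

```python
from collections import Counter

# Hypothetical label order for this sketch; consult the dataset's own
# documentation for the real index-to-emotion mapping.
BASIC_EMOTIONS = ["Surprise", "Fear", "Disgust", "Happiness", "Sadness", "Anger", "Neutral"]


def expression_distribution(votes):
    """Normalize per-annotator votes into a 7-dimensional distribution (assumed plain vote counting)."""
    counts = Counter(votes)
    unknown = set(counts) - set(BASIC_EMOTIONS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no votes given")
    # Counter returns 0 for emotions nobody voted for.
    return [counts[e] / total for e in BASIC_EMOTIONS]


def dominant_label(distribution):
    """Return the emotion with the largest share; ties go to the earlier label in BASIC_EMOTIONS."""
    best = max(range(len(distribution)), key=lambda i: distribution[i])
    return BASIC_EMOTIONS[best]


# Example: 40 hypothetical annotator votes for one image.
votes = ["Happiness"] * 30 + ["Surprise"] * 6 + ["Neutral"] * 4
dist = expression_distribution(votes)
print([round(p, 3) for p in dist])  # [0.15, 0.0, 0.0, 0.75, 0.0, 0.0, 0.1]
print(dominant_label(dist))         # Happiness
```

Keeping the full distribution rather than only the majority label preserves information about ambiguous expressions, such as the Happiness/Surprise split in the example above.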