Real-world Affective Faces Database

Details

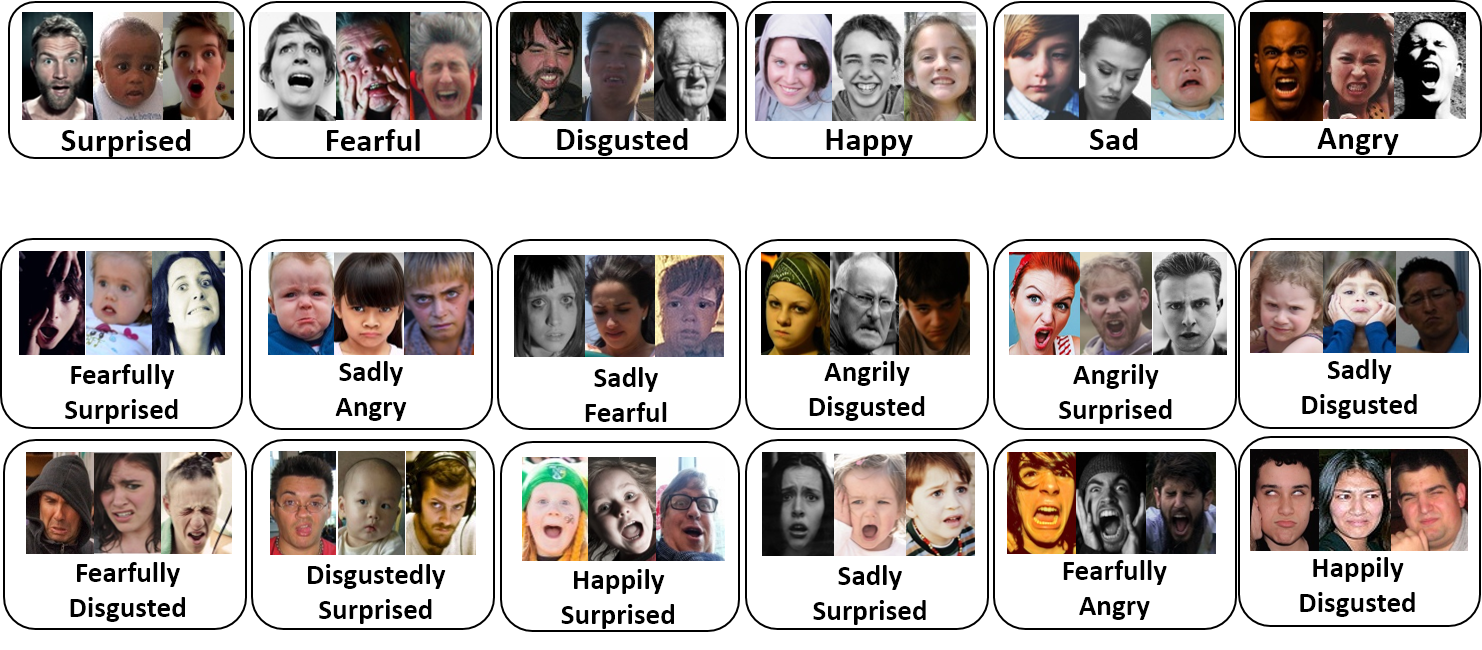

Real-world Affective Faces Database (RAF-DB) is a large-scale facial expression database with around 30K highly diverse facial images downloaded from the Internet. Through crowdsourced annotation, each image has been independently labeled by about 40 annotators. Images in this database vary widely in subjects' age, gender and ethnicity, head poses, lighting conditions, occlusions (e.g. glasses, facial hair or self-occlusion), post-processing operations (e.g. various filters and special effects), etc. RAF-DB offers great diversity, large quantity, and rich annotations, including:

- 29672 real-world images,
- a 7-dimensional expression distribution vector for each image,
- two different subsets: single-label subset, including 7 classes of basic emotions; two-tab subset, including 12 classes of compound emotions,
- 5 accurate landmark locations, 37 automatic landmark locations, bounding box, race, age range and gender attributes annotations per image,
- baseline classifier outputs for basic emotions and compound emotions.
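As a rough illustration of the 7-dimensional expression distribution vector listed above, the following Python sketch turns per-class annotator votes into a normalized distribution and picks the most-voted class. The class names, their order, and the example vote counts are assumptions made for illustration only; they are not taken from this page, so check the dataset's READ ME for the actual label encoding.

```python
from typing import List, Sequence

# ASSUMPTION: illustrative class names and order, not confirmed by this page.
BASIC_EMOTIONS = (
    "surprise", "fear", "disgust", "happiness", "sadness", "anger", "neutral",
)


def normalize_votes(votes: Sequence[float]) -> List[float]:
    """Convert raw per-class vote counts into a distribution that sums to 1."""
    if len(votes) != len(BASIC_EMOTIONS):
        raise ValueError("expected one vote count per basic emotion class")
    total = sum(votes)
    if total <= 0:
        raise ValueError("at least one vote is required")
    return [v / total for v in votes]


def dominant_emotion(distribution: Sequence[float]) -> str:
    """Return the class name with the highest weight in the distribution."""
    best_index = max(range(len(distribution)), key=lambda i: distribution[i])
    return BASIC_EMOTIONS[best_index]


# Hypothetical votes from 40 annotators for one image.
example_votes = [2, 0, 1, 30, 3, 0, 4]
example_distribution = normalize_votes(example_votes)
example_label = dominant_emotion(example_distribution)
```

Reducing a distribution to a single label this way discards the annotator disagreement that the full vector preserves, so the vector itself is usually the more informative target when label uncertainty matters.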


Terms & Conditions

The RAF database is available for non-commercial research purposes only.

All images of the RAF database are obtained from the Internet and are not the property of PRIS, Beijing University of Posts and Telecommunications. PRIS is not responsible for the content or the meaning of these images.

You agree not to reproduce, duplicate, copy, sell, trade, resell or exploit for any commercial purposes, any portion of the images and any portion of derived data.

You agree not to further copy, publish or distribute any portion of the RAF database, except that copies of the dataset may be made for internal use at a single site within the same organization.

The PRIS reserves the right to terminate your access to the RAF database at any time.

How to get the Password

This database is publicly available. It is free for professors and research scientists affiliated with a university.

Permission to use but not reproduce or distribute the RAF database is granted to all researchers given that the following steps are properly followed:

Send an e-mail to Shan Li (email1) before downloading the database. You will need a password to access the files of this database. Your e-mail MUST be sent from a valid university account and MUST include the following text:

Subject: Application to download the RAF Face Database

Name: <your first and last name>

Affiliation: <University where you work>

Department: <your department>

Position: <your job title>

Email: <must be the email at the above mentioned institution>

I have read and agree to the terms and conditions specified in the RAF face database webpage. This database will only be used for research purposes. I will not make any part of this database available to a third party. I will not sell any part of this database or make any profit from its use.

Content Preview

- Single-label Subset (Basic emotions): Image, Annotation, Emotion Label, READ ME
- Two-tab Subset (Compound emotions): Image, Annotation, Emotion Label, READ ME

For more details of the dataset, please refer to the paper "Reliable Crowdsourcing and Deep Locality-Preserving Learning for Expression Recognition in the Wild".

* Please note that the RAF database is only partially public. The other 10k images, which show neither basic nor compound emotions, will be released afterwards.

Code

We have uploaded the configuration parameters of the DLP-CNN and the hyper-parameters of the training process. You can download them from here.

Citation

@inproceedings{li2017reliable,
  title={Reliable Crowdsourcing and Deep Locality-Preserving Learning for Expression Recognition in the Wild},
  author={Li, Shan and Deng, Weihong and Du, JunPing},
  booktitle={2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages={2584--2593},
  year={2017},
  organization={IEEE}
}

@article{li2019reliable,
  title={Reliable Crowdsourcing and Deep Locality-Preserving Learning for Unconstrained Facial Expression Recognition},
  author={Li, Shan and Deng, Weihong},
  journal={IEEE Transactions on Image Processing},
  volume={28},
  number={1},
  pages={356--370},
  year={2019},
  publisher={IEEE}
}