Ethnicity Aware Training Datasets

INTRODUCTION

A major driver of bias in face recognition, as in other AI tasks, is the training data. Deep face recognition networks are often trained on large-scale datasets such as CASIA-WebFace, VGGFace2 and MS-Celeb-1M, all of which contain racial bias. Social awareness must therefore be brought to the construction of training datasets. Here we provide four training datasets, i.e. BUPT-Balancedface, BUPT-Globalface, BUPT-Transferface and MS1M_wo_RFW, for studying facial bias and achieving fair performance.

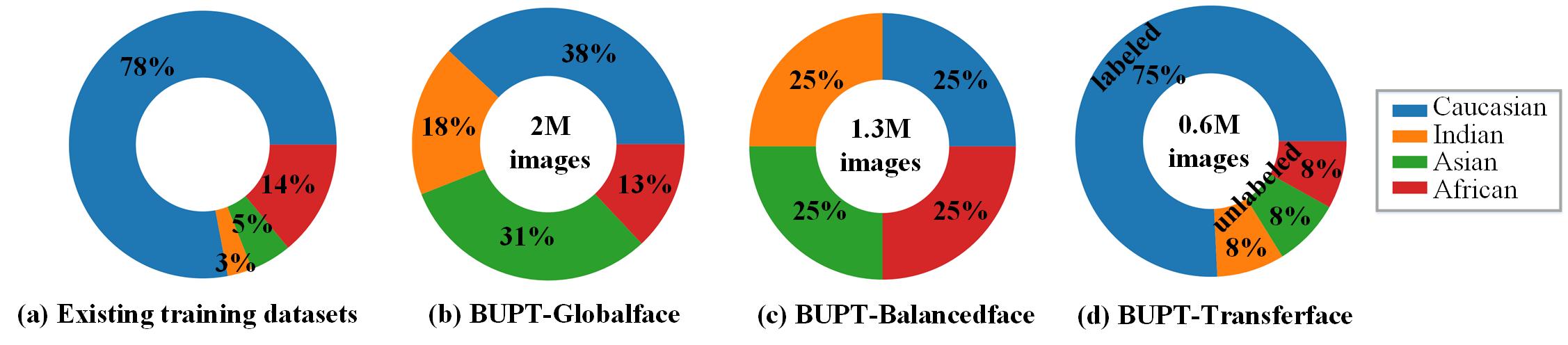

The percentage of different races in commonly used training datasets and in the BUPT-Globalface and BUPT-Balancedface datasets.

Ⅰ. BUPT-BALANCEDFACE

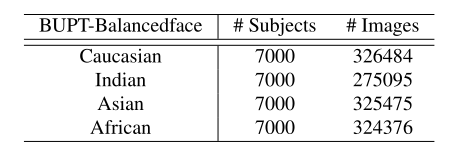

To remove this source of bias, we construct BUPT-Balancedface (Equalizedface), which contains 1.3M images from 28K celebrities and is approximately race-balanced, with 7K identities per race. The images are selected from MS-Celeb-1M or downloaded directly from websites according to the “Nationality” attribute of FreeBase celebrities or the Face++ API.

The number of identities and images in BUPT-Balancedface.

Ⅱ. BUPT-GLOBALFACE

In existing training datasets, some race groups are over-represented and others are under-represented. To represent people of different regions equally, we construct BUPT-Globalface (Worldface), which contains 2M images from 38K celebrities in total. Its racial distribution is approximately the same as the real distribution of the world’s population.

The example images in our BUPT-Globalface dataset.

Ⅲ. BUPT-TRANSFERFACE

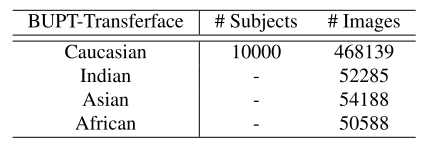

Unsupervised domain adaptation (UDA) is one of the promising methodologies for addressing algorithmic bias. We therefore provide a training dataset, BUPT-Transferface, under the UDA setting for mitigating racial bias. We provide a race label for each image but provide identity labels only for Caucasians. You can use the labeled images of Caucasians as the source domain and the unlabeled images of non-Caucasians as the target domain.

• For labeled data, each identity is named '< freebaseID >' as provided by Freebase, e.g. 'm.0xnkj'. Each face image has a unique name, e.g. 'm.0xnkj/m.0xnkj_00000.jpg', where 'm.0xnkj_00000.jpg' follows the pattern '< identityID >_< faceID >.jpg'.

• For unlabeled data, each face image also has a unique name, e.g. 'asian/asian_00000.jpg', where 'asian_00000.jpg' follows the pattern '< race >_< faceID >.jpg'.
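The naming scheme above can be parsed mechanically. The following is a minimal sketch, not part of the dataset tooling: the names `FaceFile` and `parse_face_path` are hypothetical, and the regular expressions are assumptions inferred only from the two example paths given here (Freebase IDs starting with 'm.' and race folders made of letters).

```python
import re
from typing import NamedTuple, Optional


class FaceFile(NamedTuple):
    """Parsed pieces of a relative image path (hypothetical helper type)."""
    group: str               # folder name: identity ID (labeled) or race (unlabeled)
    face_id: str             # face index, kept as a string to preserve zero padding
    identity: Optional[str]  # Freebase ID for labeled images, None for unlabeled ones


# Assumption: Freebase IDs look like 'm.0xnkj' (inferred from the single example).
_LABELED = re.compile(r"^(m\.[0-9a-z_]+)/\1_(\d+)\.jpg$")
# Assumption: race folders are plain letters, e.g. 'asian'.
_UNLABELED = re.compile(r"^([a-z]+)/\1_(\d+)\.jpg$", re.IGNORECASE)


def parse_face_path(rel_path: str) -> FaceFile:
    """Split '<folder>/<folder>_<faceID>.jpg' into its components."""
    rel_path = rel_path.replace("\\", "/")  # tolerate Windows separators
    match = _LABELED.match(rel_path)
    if match:
        return FaceFile(match.group(1), match.group(2), match.group(1))
    match = _UNLABELED.match(rel_path)
    if match:
        return FaceFile(match.group(1), match.group(2), None)
    raise ValueError(f"unrecognized image path: {rel_path!r}")
```

Under these assumptions, images whose parsed `identity` is not `None` would form the labeled source domain, and the remaining images would form the unlabeled target domain, grouped by race.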

The number of identities and images in BUPT-Transferface.

Ⅳ. MS1M_WO_RFW

To develop fair technology across different races, we recommend using our testing dataset, RFW, to evaluate face recognition performance. However, RFW overlaps with the commonly used training dataset MS-Celeb-1M, which makes MS-Celeb-1M inconvenient to use for training. We therefore remove the overlapping identities and release the remaining images of MS1M as MS1M_wo_RFW for large-scale training. If you have the original version of MS-Celeb-1M, you can directly select the non-overlapping images from MS1M according to the index file, MS1M_wo_RFW_index.zip.
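Selecting images by index could be sketched as follows. The format of the index file is not described here, so this example assumes, purely for illustration, that the unzipped index is a text file with one relative image path per line. The helper names `normalize`, `read_index` and `keep_indexed` are hypothetical, as are the paths in the usage example.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Set


def normalize(path: str) -> str:
    """Strip whitespace and unify separators so paths compare reliably."""
    return PurePosixPath(path.strip().replace("\\", "/")).as_posix()


def read_index(lines: Iterable[str]) -> Set[str]:
    """Collect the indexed relative paths, skipping blank lines.

    Assumption: one relative image path per line (format not confirmed).
    """
    return {normalize(line) for line in lines if line.strip()}


def keep_indexed(candidates: Iterable[str], index: Set[str]) -> List[str]:
    """Return the candidate paths listed in the index, in their original order."""
    return [path for path in candidates if normalize(path) in index]
```

A usage example with made-up paths:

```python
index = read_index(["m.0xnkj/0.jpg\n", "\n", "m.01abc/1.jpg\n"])
kept = keep_indexed(["m.0xnkj/0.jpg", "m.zzz/5.jpg", "m.01abc/1.jpg"], index)
```

Here `kept` would contain only the two paths that appear in the index. If the real index uses a different layout, `read_index` is the only function that would need to change.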

DOWNLOAD

These four training datasets are available for non-commercial research purposes only. A complete version of the license can be found here. Permission to use, but not to reproduce or distribute, these databases is granted to all researchers provided that the following steps are properly followed:

Send an e-mail to Ms. Mei Wang (wangmei1@bnu.edu.cn) before downloading the databases. You will need a password to access the files. We check e-mail twice per week. Your e-mail MUST be sent from a valid official (University or Company) account and MUST include the following text:

Subject: Application to download the Ethnicity-Aware Face Databases

Name: (your first and last name)

Affiliation: (University or Company where you work)

Department: (your department)

Position: (your job title)

Email: (must be the email at the above mentioned institution)

I have read and agree to the terms and conditions specified in the Ethnicity-Aware face database webpage.

These databases will only be used for research purposes.

I will not make any part of these databases available to a third party.

I will not sell any part of these databases or make any profit from their use.

If the Dropbox public links are temporarily suspended for generating excessive traffic, please try again several days later.

PUBLICATIONS

Please cite the following if you make use of the dataset.

[1] Mei Wang, Weihong Deng, Jiani Hu, Xunqiang Tao, Yaohai Huang. Racial Faces in the Wild: Reducing Racial Bias by Information Maximization Adaptation Network. ICCV 2019.

[2] Mei Wang, Yaobin Zhang, Weihong Deng. Meta Balanced Network for Fair Face Recognition. TPAMI 2021.

[3] Mei Wang, Weihong Deng. Mitigating Bias in Face Recognition using Skewness-Aware Reinforcement Learning. CVPR 2020.

[4] Mei Wang, Weihong Deng. Deep face recognition: A Survey. Neurocomputing.

CONTACT US

Please contact Mei Wang and Weihong Deng with questions about the databases.